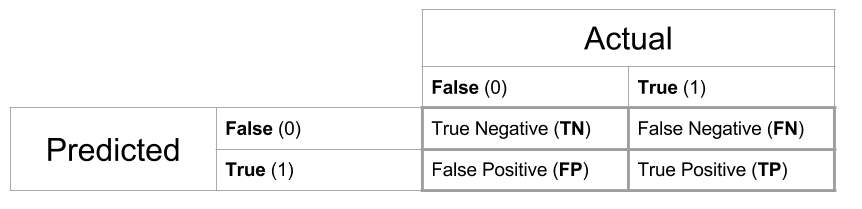

Simple guide to confusion matrix terminology

A confusion matrix is a table that is often used to describe the performance of a classification model (or "classifier") on a set of test data for which the true values are known. The confusion matrix itself is relatively simple to understand, but the related terminology can be confusing.

I wanted to create a "quick reference guide" for confusion matrix terminology because I couldn't find an existing resource that suited my requirements: compact in presentation, using numbers instead of arbitrary variables, and explained both in terms of formulas and sentences.

Let's start with an example confusion matrix for a binary classifier (though it can easily be extended to the case of more than two classes).

- There are two possible predicted classes: "yes" and "no". If we were predicting the presence of a disease, for example, "yes" would mean they have the disease, and "no" would mean they don't have the disease.
- The classifier made a total of 165 predictions (e.g., 165 patients were being tested for the presence of that disease).
- In reality, 105 patients in the sample have the disease, and 60 patients do not.

Let's now define the most basic terms, which are whole numbers (not rates):

- true positives (TP): These are cases in which we predicted yes (they have the disease), and they do have the disease.
- true negatives (TN): We predicted no, and they don't have the disease.
- false positives (FP): We predicted yes, but they don't actually have the disease.
- false negatives (FN): We predicted no, but they actually do have the disease.

I've added these terms to the confusion matrix, and also added the row and column totals.

This is a list of rates that are often computed from a confusion matrix for a binary classifier:

- Accuracy: Overall, how often is the classifier correct?

I need help with the caret::train function. While messing around with R, I created a new variable called "age" in the Auto data frame in order to predict whether a car can be classified as "old" or "new", depending on whether the year of a given observation is below or above the median of the variable "year". Now I just want to perform LDA using 10-fold CV. I understand from the function trainControl that if classProbs=TRUE, the method will return class probabilities and the assigned class, but I can't seem to find what I want. Ideally I want something similar to the argument "CV=TRUE" in the MASS::lda function, but instead of doing LOOCV, I want to use k-fold CV. Any ideas? Here's the code:

```r
library(ISLR)

# create vector and add as new column to the Auto data frame
age[Auto$year …
#> Linear Discriminant Analysis
```

```r
library(ISLR)

age[Auto$year …
#> Cross-Validated (10 fold) Confusion Matrix
#> (entries are percentual average cell counts across resamples)

# Get a confusion matrix by pooling the out-of-sample predictions
confusionMatrix(lda_auto_10cv$pred$pred, lda_auto_10cv$pred$obs)
```

Created by the reprex package (v0.3.0)

Note that the pooled version of the confusion matrix will not give you the same answers as the resampled accuracy statistics. The former is computed by pooling all out-of-sample predictions, while the resampling results average 10 different accuracy numbers, one per fold.
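The gap between pooled and resampled accuracy can be illustrated with a small base-R sketch. It does not use caret or the Auto data: the fold labels, fold sizes, and correct/incorrect flags below are made up for illustration, and three folds are used instead of ten to keep it short. The point is only that averaging per-fold accuracies and computing one accuracy over all held-out predictions can disagree when folds differ in size.

```r
# Hypothetical out-of-sample results from 3 folds of unequal size.
# correct = TRUE means the held-out prediction matched the observed class.
fold    <- c(rep("Fold1", 4), rep("Fold2", 4), rep("Fold3", 2))
correct <- c(TRUE, TRUE, TRUE, FALSE,    # Fold1: 3 of 4 correct
             TRUE, TRUE, FALSE, FALSE,   # Fold2: 2 of 4 correct
             TRUE, TRUE)                 # Fold3: 2 of 2 correct

# Pooled accuracy: treat all held-out predictions as one test set.
pooled_acc <- mean(correct)

# Resampled accuracy: accuracy within each fold, then the average of those.
per_fold_acc  <- tapply(correct, fold, mean)
resampled_acc <- mean(per_fold_acc)

print(per_fold_acc)
print(pooled_acc)
print(resampled_acc)
```

With equally sized folds the two accuracy figures would coincide, so any difference seen in practice comes from folds that are not all the same size.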

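Going back to the disease example, accuracy can be worked out directly from the four basic counts. The text only states the totals (165 predictions, 105 patients with the disease, 60 without), so the individual cell counts in this base-R sketch are assumptions chosen to agree with those totals, not values taken from the text.

```r
# Assumed cell counts, chosen only to agree with the stated totals.
TP <- 100  # predicted yes, actually yes
FN <- 5    # predicted no,  actually yes
FP <- 10   # predicted yes, actually no
TN <- 50   # predicted no,  actually no

# Rows are actual classes, columns are predicted classes.
cm <- matrix(c(TN, FP,
               FN, TP),
             nrow = 2, byrow = TRUE,
             dimnames = list(actual = c("no", "yes"),
                             predicted = c("no", "yes")))

# Sanity checks against the totals given in the text.
stopifnot(sum(cm) == 165,
          sum(cm["yes", ]) == 105,
          sum(cm["no", ]) == 60)

# Accuracy: correct predictions (the diagonal) over all predictions.
accuracy <- sum(diag(cm)) / sum(cm)

print(cm)
print(accuracy)
```

Under these assumed counts, accuracy is (TP + TN) / total = 150 / 165.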